Netvisor Analytics: Secure the Network/Infrastructure

We recently heard President Obama declare cyber security one of his top priorities, and in recent times we have seen major corporations suffer tremendously from breaches and attacks. The most notable is the breach at Anthem. For those who are still unaware, Anthem is the umbrella company that runs Blue Shield and Blue Cross Insurance as well. The attackers had access to people’s details, social security numbers, home addresses, and email addresses for a period of a month. What was taken and the extent of the damage is still guesswork, because the network is a black hole that needs extensive tools to figure out what is happening or what happened. This also means that my family is impacted, and since we use Blue Shield at Pluribus Networks, every employee and their family is impacted as well. That prompted me to write this blog as an open invitation to the Anthem people and the government to pay attention to a new architecture that lets the network play a role similar to the NSA in helping protect the infrastructure. It all starts with converting the network from a black hole into something we can measure and monitor. To make this meaningful, let’s look at the state of the art today and why it is of little use, followed by a step-by-step example of how Netvisor analytics helps you see everything and take action on it.

Issues with existing networks and modern attack vector

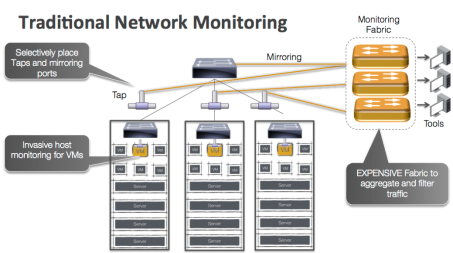

In a typical datacenter or enterprise, the typical switches and routers are dumb packet switching devices. They switch billions of packets per second between servers and clients at sub micro second latencies using very fast ASICs but have no capability to record anything. As such, external optical TAPs and monitoring networks have to be built to get a sense of what is actually going on in the infrastructure. The figure below shows what monitoring today looks like:

This is where the challenges start piling up. The typical enterprise and datacenter network that connects the servers runs at 10/40Gbps today and is moving to 100Gbps tomorrow. Each of these switches typically has 40-50 servers connected to it, each pumping traffic at 10Gbps. There are three possible ways to see everything that is going on:

- Provision a fiber optic tap at every link and divert a copy of every packet to the monitoring tools. Since fiber optic taps are passive, you have to copy every packet, and the monitoring tools need to deal with 500M to 1B packets per second from each switch. Assuming a typical pod of 15-20 racks and 30-40 switches (who runs without HA?), the monitoring tools need to deal with 15B to 40B packets per second. The monitoring software has to look inside each packet and potentially keep state to understand what is going on, which requires very complex software and an amazing amount of hardware. For reference, a typical high-end dual-socket server can get 15-40M packets per second into the system but has no CPU left to do anything else. We would need about 1000 such servers plus the associated monitoring network, so we are looking at 15-20 racks of just monitoring equipment. Add the monitoring software, storage, etc., and the cost of monitoring 15-20 racks of servers is probably 100 times more than the servers themselves.

- Selectively place fiber optic taps at uplinks or edge ports. This turns the inner network back into a black hole where we have no visibility into what is going on. A key thing we learned from the NSA and Homeland Security is that a successful defense against attack requires extensive monitoring, and you can’t monitor just the edge.

- Use the switches themselves to selectively mirror traffic to monitoring tools. This is a more popular approach these days, but it is built on sampling, with sampling rates typically 1 in 5000 to 10000 packets. It is better than nothing, but the monitoring software gets nowhere close to meaningful visibility, and the cost goes up exponentially as more switches get monitored (the monitoring fabric needs more capacity, and the monitoring software gets more complex and needs more hardware resources).
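To make the arithmetic in the first option concrete, here is a rough Python sketch that reproduces the numbers quoted above. The function name is made up for illustration, and pairing the low-end figures with each other (and the high-end figures with each other) is my assumption about how the ranges line up.

```python
# Back-of-the-envelope sizing for the "tap every link" approach.
# All inputs are the figures quoted in the text; nothing here is measured.

def monitoring_servers_needed(pps_per_switch, switches, pps_per_server):
    """Return (total packets/sec to monitor, capture servers needed)."""
    total_pps = pps_per_switch * switches
    # Ceiling division on integers: a partial server still means one more box.
    servers = -(-total_pps // pps_per_server)
    return total_pps, servers

# Low end: 500M pps per switch, 30 switches, 15M pps per capture server.
low = monitoring_servers_needed(500_000_000, 30, 15_000_000)

# High end: 1B pps per switch, 40 switches, 40M pps per capture server.
high = monitoring_servers_needed(1_000_000_000, 40, 40_000_000)

print(low)   # (15000000000, 1000)
print(high)  # (40000000000, 1000)
```

Either way the estimate lands at roughly 1000 capture servers, and that is before any CPU is spent actually analyzing the packets.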

So what is wrong with just sampling and monitoring/securing the edge? The answer is pretty obvious: we do that today, yet the break-ins keep happening. Many things contribute to this, starting with the fact that the attack vector itself has shifted. It’s not that employees in these companies have become careless; it has more to do with the myriad software and applications becoming prevalent in an enterprise or a datacenter. Just look at the amount of software and new applications that get deployed every day from so many sources, and the increasing hardware capacity underneath. Any of these can be exploited to let the attackers in. Once the attackers have access to the inside, the attack on the actual critical servers and applications comes from within. A lot of these platforms and applications go home with employees at night, where they are not protected by corporate firewalls and can easily upload data collected during the day (assuming the corporate firewall managed to block any connections). Every home is online today and most devices are constantly on the network, so attackers typically have easy access to devices at home, and the same devices go to work behind the corporate firewalls.

Netvisor provides the distributed security/monitoring architecture

The goal of Netvisor is to make a switch programmable like a server. Netvisor leverages the new breed of Open Compute switches by memory-mapping the switch chip into the kernel over PCI-Express and taking advantage of the powerful control processors, large amounts of memory, and storage built into the switch chassis. The figure below contrasts Netvisor on a Server-Switch using the current generation of switch chips with a traditional switch where the OS runs on a low-powered control processor and low-speed buses.

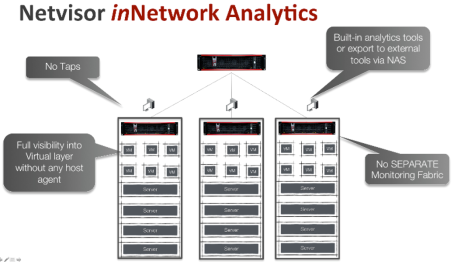

Given that the cheapest form of compute these days is an Intel Rangeley class processor with 8-16GB of memory, all the ODM switches are using that as a compute complex. Facebook’s Open Compute Project made this a standard, allowing all modern switches to have a small server inside them. That lays the foundation of our distributed analytics architecture on the switches, without requiring any TAPs or a separate monitoring network, as shown in the figure below.

Each Server-Switch now becomes an in-network analytics engine in addition to doing layer 2 switching and layer 3 routing. The Netvisor analytics architecture takes advantage of the following:

- TCAM on the switch chip, which gives it the ability to identify a flow and take a copy of the packet (without impacting the timing of the original packet) at zero cost, including TCP control packets and various network control packets

- A high-performance, multi-threaded control plane over PCIe that can get 8-10Gbps of flow traffic into the Netvisor kernel

- A quad-core Intel Rangeley class CPU with 8-16GB of memory to process the flow traffic

So Netvisor can filter the appropriate packets in the switch TCAM while switching 1.2 to 1.8Tbps of traffic at line rate, and process millions of hardware-filtered flows in software to keep the state of millions of connections in switch memory. As such, each switch in the fabric becomes a network DVR or time machine and records every application and VM flow it sees. On a Server-Switch with an Intel Rangeley class processor and 16GB of memory, each Netvisor instance is capable of tracking 8-10 million application flows at any given time. These Server-Switches have a list price of under $20k from Pluribus Networks and are cheaper than your typical switch that just does dumb packet switching.

While the servers have to be connected to the network to provide service (you can’t just block all traffic to the servers), Netvisor on the switch can be configured to allow no connections into its control plane (access only via monitors) or connections from selected clients only. That makes it much easier to defend against attack and provides an uncompromised view of the infrastructure that is not impacted even when servers get broken into.

Live Example of Netvisor Analytics (detect attack/take action via vflow)

The Analytics application on Netvisor is a Big Data application where each Server-Switch collects millions of records, and when a user runs a query from any instance, the data is collected from each Server-Switch and presented in a coherent manner. The user has full scripting support along with REST, C, and Java APIs to extract the information in whatever format they want and export it to any application for further analysis.

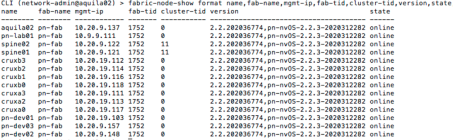

We can look at some live examples from the Pluribus Networks internal network, which uses a Netvisor-based fabric to meet all its network, analytics, security, and services needs. The fabric consists of the following switches:

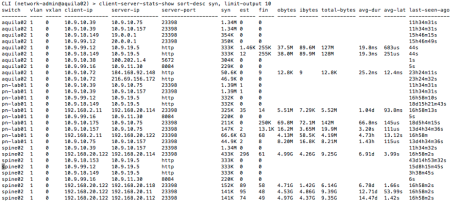

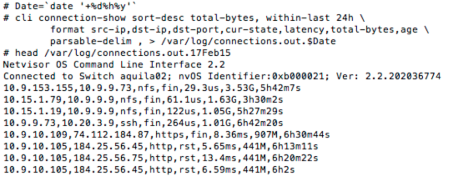

The top 10 client-server pairs with the highest rate of TCP SYNs are available using the following query:

It seems that IP address 10.9.10.39 is literally DDoS’ing server 10.9.10.75. That is very interesting. But before digging into that, let’s look at which client-server pairs are most active at the moment. So instead of sorting on SYN, we sort on EST (for established) and limit the output to the top 10 entries per switch (keep in mind that each switch has millions of records that go back days and months).

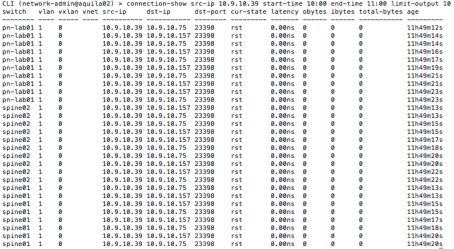
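For readers who want to picture what such a query does, here is a minimal Python sketch of the idea: aggregate per client-server pair and sort on a chosen counter. This is not the Netvisor query language or API. The record layout (`client`, `server`, `syn`, `est`) and all the counter values are invented for illustration; only the two 10.9.10.x addresses come from the example above.

```python
from collections import defaultdict

def top_pairs(records, counter, limit=10):
    """Sum `counter` per (client, server) pair and return the top `limit` pairs."""
    totals = defaultdict(int)
    for rec in records:
        totals[(rec["client"], rec["server"])] += rec[counter]
    # Highest total first; ties keep their first-seen order.
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)[:limit]

# Made-up connection records; the counts are placeholders, not real data.
records = [
    {"client": "10.9.10.39", "server": "10.9.10.75", "syn": 4000, "est": 0},
    {"client": "10.9.10.39", "server": "10.9.10.75", "syn": 3500, "est": 0},
    {"client": "192.0.2.10", "server": "192.0.2.20", "syn": 12, "est": 12},
    {"client": "192.0.2.11", "server": "192.0.2.20", "syn": 3, "est": 3},
]

by_syn = top_pairs(records, "syn", limit=2)  # noisy pair comes out on top
by_est = top_pairs(records, "est", limit=2)  # noisy pair is absent here
print(by_syn)
print(by_est)
```

A pair that tops the SYN list but never appears in the established list is exactly the pattern that stands out in this example.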

It appears that the IP address which had a very high SYN rate does not show up in the established list at all. The failed SYNs showed up approximately 11 hours ago (around 10:30am this morning), so let’s look at all the connections with src-ip 10.9.10.39.

This shows that not a single connection was successfully established. For sanity’s sake, let’s look at the top connections in terms of total bytes during the same period.

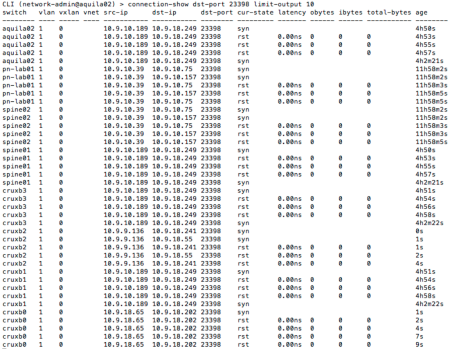

So the mystery deepens. The dst-port in question was 23398, which is not a well-known port. So let’s look for the same connection signature. The easiest way is to look at all connections with destination port 23398.

It appears that multiple clients have the same signature. Obviously we dug in deeper without limiting any output and looked at this from many angles. After some investigation, it appears that this is not a legitimate application and no developer at Pluribus owns these particular IP addresses. Our port analytics showed that these IPs belong to virtual machines that were all created a few days back around the same time. The prudent thing is to quickly block this port altogether across the entire fabric using the vflow API.

It is worth noting that we used the scope fabric to create this flow with action drop to block it across the entire network (on every switch). We could have used a different flow action to look at this flow live or record all traffic matching this flow across the network.

Outlier Analysis

Given that Netvisor Analytics is not a statistical sample and accurately represents every single session between the servers and/or virtual machines, most customers deploy some form of scripting and logging mechanism to collect this information. The example below shows the information a person is really interested in, obtained by selecting only the columns they want to see.

The same command is run from a cron job every night at midnight via a script, with a parseable delimiter of choice, and the output is recorded in flat files and moved to a different location.

Another script records all destination IP addresses, sorts them, and compares them with the previous day’s list to see which new IP addresses showed up in the outbound list, and similarly for the inbound list. Connections where both source and destination are local are ignored, but IP addresses where either end is outside are fed into other tools that track the quality of the IP address against attacker databases. Anything suspicious is flagged immediately. Similar scripts are used for compliance, to ensure there was no attempt to connect outside of legal services and that servers didn’t issue outbound connections to employees’ laptops (to detect malware).
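The day-over-day comparison can be sketched in a few lines of Python. This is only an illustration of the idea, not the customer’s actual script: the function names are made up, the flows are plain `(source, destination)` tuples with placeholder addresses, and treating the RFC 1918 private ranges as “local” is my assumption (a real deployment would list its own prefixes).

```python
import ipaddress

# Assumption: "local" means RFC 1918 private address space.
LOCAL_NETS = [ipaddress.ip_network(n)
              for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]

def is_local(ip):
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in LOCAL_NETS)

def external_peers(flows):
    """Return (outbound, inbound) sets of external IPs seen in the flows.

    Local-to-local flows are skipped; so are flows where neither end is local.
    """
    outbound, inbound = set(), set()
    for src, dst in flows:
        src_local, dst_local = is_local(src), is_local(dst)
        if src_local and not dst_local:
            outbound.add(dst)   # we connected out to an external address
        elif dst_local and not src_local:
            inbound.add(src)    # an external address connected in to us
    return outbound, inbound

def new_since(previous, current):
    """Sorted list of IPs present today but not in the previous day's set."""
    return sorted(current - previous)

# Placeholder flows for two consecutive nightly exports.
yesterday = [("10.9.10.20", "203.0.113.5"), ("10.9.10.20", "10.9.10.75")]
today = [("10.9.10.20", "203.0.113.5"),
         ("10.9.10.21", "198.51.100.7"),
         ("203.0.113.9", "10.9.10.75")]

out_prev, in_prev = external_peers(yesterday)
out_now, in_now = external_peers(today)
new_outbound = new_since(out_prev, out_now)
new_inbound = new_since(in_prev, in_now)
print(new_outbound, new_inbound)
```

The resulting lists of new outbound and inbound addresses are what would be handed to a reputation lookup.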

Summary

Later investigation showed that we didn’t have an intruder in our live example; one of the developers had created a bunch of virtual machines by cloning a disk image that contained this misbehaving application. It is still unclear where it found the server IP address, but things like this, and actual attacks, have happened in the past at Pluribus Networks, and Netvisor analytics helps us track them and take action. The network is no longer a black hole; it exposes the weaknesses of the applications running on our servers and virtual machines.

The description of the scripts in the outlier analysis is deliberately vague since it relates to a customer’s security procedure, but we are building more sophisticated analysis engines to detect anomalies in real time against normal behavior.

Netvisor Takes SDN Switching Mainstream with $50M Series D

We closed our Series D financing right before Christmas. This is a $50M round led by Temasek and Ericsson. Temasek is a $170B-plus sovereign fund out of Singapore that is best described as the Berkshire Hathaway of technology. They were the people responsible for investments into Alibaba. It is important to understand that with Netvisor achieving success in the Enterprise Datacenter and Private Cloud markets, the bigger players now believe that SDN switching and applications on Server-Switches are pretty real.

The funding is primarily to scale our business side and help sell more products, build support infrastructure, and create an application group that can write more applications on Netvisor to exploit the world of programmable networks.

Netvisor as an Application Platform

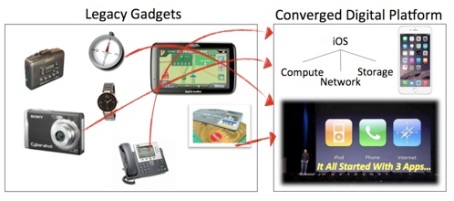

The best way to explain this is to draw a parallel between Netvisor as a switch hypervisor and the smartphone.

When Apple released an iOS-based smartphone, the world was full of small hardware devices like cameras, GPS navigators, etc. iOS (and later Android) became a software platform that allowed many applications to come on top of it.

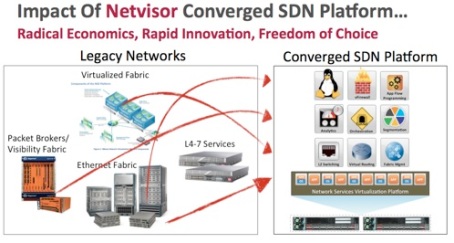

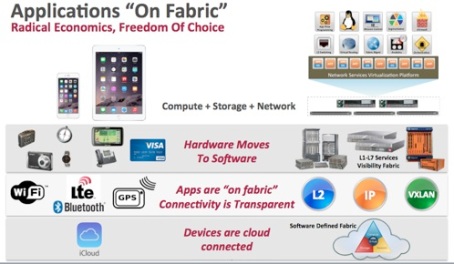

Netvisor is creating the same paradigm for datacenter switching. Today, you have a physical fabric, a separate observability fabric (using TAPs and probes, which are more expensive than the physical network), hardware appliances for services, and separate overlay networks with their controller appliances. Since Netvisor is a distributed bare metal switch OS, it allows all of this functionality to come in as software applications on top of Netvisor.

Applications are always “On Fabric”

Continuing the parallel with the world of smartphones, we can see that a whole new generation of applications was enabled by the Apple iOS platform. The application writer could write an application assuming connectivity all the time, without understanding the 3G/4G/LTE/Bluetooth/WiFi protocols. More importantly, the application doesn’t have to deliver the necessary protocol stack to provide the connectivity. Netvisor Cluster-Fabric provides the same functionality to application developers. The physical fabric can be Layer 2, Layer 3, or tunnel based (and contain any networking hardware in between). The applications are always “On Fabric” and don’t have to understand the physical topology of the network or how the network is connected.

The fabric is constructed using Layer 2/3/VXLAN via the Netvisor CLI/UI, and applications use the Netvisor C/Java/REST APIs and fabric abstractions to program the network, get the analytics, or provide the services.

Open Fabric Virtualization – Unify the Overlay and Underlay

We always believed in a data and control plane separation but we always kept the real world in mind. That is why Netvisor was always a bare metal distributed control plane and we documented several years back that centralized control planes will not scale (see https://sunaytripathi.wordpress.com/2012/07/31/how-does-openflow-sdn-help-virtualizationcloud-part-3-of-3-why-nicira-had-to-do-a-deal/).

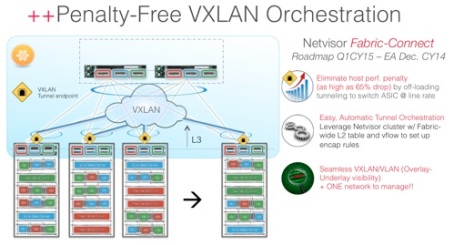

While our approach of a bare metal control plane (separate from the data plane) is getting validated, we see the bulk of the industry again going off on a tangent with separate overlay and underlay networks. That is absolutely the wrong direction to take, and it complicates the server as well as the network with no visibility into what is going on. We are currently starting the beta test of Netvisor Open Fabric Virtualization, where we allow the tunnels to move to the switches and the encapsulation rules are created in switch hardware on demand. When a VM wants to talk to another VM, Netvisor gets the ARP packet (or an L2 miss) and automatically creates an encapsulation rule using its vflow API. This allows the servers to go back to being Linux/KVM-based servers without worrying about tunnels and network topology, and lets Netvisor-based switches deal with tunnel tables the same way they deal with switching tables and routing tables.
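The on-demand behavior can be pictured with a toy Python model. To be clear, this is not the vflow API and not how Netvisor is implemented; the class, its method name, and the idea of a simple lookup table from VM address to the switch it sits behind are all assumptions made purely to illustrate “create the encapsulation rule on the first ARP or L2 miss, then reuse it”.

```python
class LazyEncapTable:
    """Toy model of a switch that installs tunnel rules only when needed."""

    def __init__(self, local_switch, vm_locations):
        self.local_switch = local_switch
        # Hypothetical fabric-wide view: VM address -> switch it is attached to.
        self.vm_locations = vm_locations
        # Installed rules: destination VM address -> remote tunnel endpoint.
        self.rules = {}

    def on_arp_or_l2_miss(self, dst_vm):
        """Return the tunnel endpoint for dst_vm, installing a rule on first use.

        Returns None when the destination is unknown or attached to this
        switch, since no tunnel is needed in either case.
        """
        if dst_vm in self.rules:
            return self.rules[dst_vm]          # rule already installed
        remote = self.vm_locations.get(dst_vm)
        if remote is None or remote == self.local_switch:
            return None
        self.rules[dst_vm] = remote            # install the rule on demand
        return remote

# Placeholder topology: two VMs behind two different switches.
table = LazyEncapTable("switch-a", {"10.0.0.11": "switch-a",
                                    "10.0.0.12": "switch-b"})
first = table.on_arp_or_l2_miss("10.0.0.12")   # miss: rule gets created
again = table.on_arp_or_l2_miss("10.0.0.12")   # hit: rule is reused
local = table.on_arp_or_l2_miss("10.0.0.11")   # same switch: no tunnel
print(first, again, local, table.rules)
```

The point of the sketch is that the servers never see any of this; the tunnel table lives on the switch alongside the switching and routing tables.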

Summary

The year 2015 is going to be an interesting year: SDN is reaching a tipping point, we will see large-scale deployments, and networking will finally arrive in the 21st century.

Netvisor powers the Rackscale Architecture from Intel/Supermicro

On May 5th, 2014, we announced that Pluribus Networks Netvisor is now powering the switch blades on the new Intel blade chassis announced by Supermicro Inc. It’s creating quite a stir and is a proud moment for everyone at Pluribus Networks and Supermicro who made this possible.

There are several reasons why Netvisor is the ideal Hypervisor to power the switching blades:

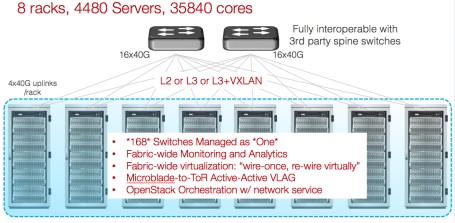

- Integrated Openstack Controller with Horizon and REST APIs as the only management that is needed – The entire Netvisor cluster-fabric and the virtual/physical switching on the compute blades is exported to Openstack via Neutron plugins and extensions. Our Freedom series Server-Switches also bundle the full Openstack controller, allowing the entire rack of microblades to be managed as one unit via the Openstack Horizon GUI. For people wanting to manage the network layer via traditional tools, Netvisor also offers a full-featured CLI to manage the cluster-fabric, along with high-performance, multithreaded native C and Java APIs. Netvisor also provides multiple virtualized services with H/W offload, so services like NAT, DNS/DHCP, IP-Pools, routing, load balancing, etc. are integrated via Openstack Horizon to support multi-tenancy at scale.

- Netvisor is a Distributed Plug and Play Hypervisor – The Supermicro blade chassis has 4 switch blades and 14 server blades. This is where racks are going with micro servers etc. In a typical rack, you will have 7 such chassis, giving you 98 server blades and 28 switch blades plus two top of the rack switches: an ideal platform for the Rackscale computing that HPC and Private Cloud need. In such an architecture, you can’t deal with individual switches. Netvisor provides a full plug-and-play architecture with zero-touch provisioning, and the entire switching infrastructure appears as one cluster-fabric with synchronized state and configuration sharing and no cabling mess. The entire cluster-fabric protocol and algorithm is based on TCP/IP with no special ASIC or H/W.

- Application level Analytics and Debuggability – Netvisor has built-in support for looking at all physical servers, virtual machines, and applications without needing any probes or agent software. The addition of the Freedom series as the Top of the Rack layer allows users to track millions of VMs and application-level flows in real time as well as historical data. This helps with capacity planning, audit, congestion analytics, VM life cycle management, and application-level performance and debuggability analysis. In multi-tenancy environments, Netvisor allows each individual tenant to analyze its own VMs, applications, and services.

- Netvisor powers all types of switching platforms – Just the way server hypervisors now run ubiquitously from laptops to desktops to high-end servers, Netvisor supports everything from micro switch blades to ODM Top of the Rack switches and more powerful Server-Switches.

- Netvisor runs on every possible control plane – On the Supermicro switch blades, Netvisor runs on an Avoton-based control processor with 8GB of memory and limited flash storage. On other switches, it uses single- to dual-socket Xeons with 512GB of RAM and Fusion-io based storage.

- Netvisor is fully Open – It runs on all open platforms and is built from a best-of-breed open source OS with additions for switching, Cluster-Fabric, and switch virtualization. Run it in traditional switch mode or with your users’ choice of Linux (Ubuntu and Fedora by default).

Use Netvisor with Openstack on Supermicro’s blade chassis along with the Freedom series Top of the Rack to run the most dense and power efficient Rackscale Architecture today.

The Battle for the Top of the Rack

The Battlefield between Sysadmin and Netadmin

The fight for control between sysadmin and network admin has been going on for decades, but the boundary line had been pretty static. Anything that ran a full OS and was an end node was a server and fell under server ops, while anything that connected the servers together was a network device and fell under the control of network operations.

If you look at the progression of the two sides through the last two decades, you will realize that the server and server OS have gone through change after change with new software packaging systems, virtualization, density of servers per rack, and so on, while networking technology has remained pretty static other than speeds and feeds and some tagging protocols. While the server admin kept reinventing himself through open source, virtualization, and six-nines uptime, the network got split into three distinct categories (forgive me, Gartner, for the gross simplification):

- The Datacenter Networking: With the heavy lifting being done by server ops, and running applications and virtual machines being the most critical need, the network admin tended to get in the way and exerted control via IP address and VLAN management. The network services which used to be important are also becoming software based and open source, and are fast becoming the domain of server ops. Server operations is looking for the network to provide value, analytics, debuggability, instant scale, threat analysis, and virtualized services, which network operations wasn’t trained to provide, and hence the relationship between the two organizations has increasingly soured.

- The Telecommunications Networking: The network admin had to deal with increased complexity and is becoming very specialized, where often Ph.D.s are deployed to ensure the complex wide area network continues to work. Security and service level agreements are paramount. In this scenario, network operations is doing the heavy lifting.

- The Campus and Enterprise Network: This used to be a server operations dominated world, but as each student gets 5 IP devices and wants gig+ B/W for their social and gaming needs, the security, analytics, and manageability needs are increasing.

Is the future bleak for Network Operations in Datacenter and Campus?

The network is becoming more challenging in the fast-moving world of servers, virtualization, threats, the need for instant information, and instant scale. Network operations in datacenter and campus networking have not upgraded their skill sets, resulting in SDN and “keep the network dumb” initiatives. Most of these initiatives lack deployment scale, but Pluribus Netvisor is a full-fledged open source Network OS designed to make the Top of the Rack another server. Drawing on my past experience with virtual switching, Netvisor runs on the Top of the Rack Server-Switch and gives the ability to seamlessly blend and control the virtual and physical networks and orchestrate them as one. Our philosophy is similar to Cisco’s in that the Top of the Rack switch is too powerful to ignore, but one needs a full Network OS (aka Netvisor) to unleash its power. With full Unix programmability and C, Java, Perl, and Python interfaces, the existing server tool chain can now program the network as well. Netvisor running on the Server-Switch and working in conjunction with Openstack and VMware NSX style orchestration can really solve the major pain point.

So one would naturally assume that Netvisor running on the F64 and the new whiteboxes will become the domain of Server Operations, and that the rack will be fully owned by the sysadmin, controlled with the same management paradigm, and programmed with the server-style tool chain (gcc/gdb)! A large segment of our customer base is proving the point. But we are also seeing a smaller segment of savvy network admins at our doorstep who want to embrace this new paradigm. Pluribus gives a welcome adjunct to VXLAN-style server overlays that call for only low-feature networks that do nothing more than push packets. While talking to these guys, we are providing a management interface that gives network admins a bridge to the new world, where the network becomes a differentiator and adds huge value to the server and application infrastructure while working as one with it.

The changes needed in Network Operations to be successful

During our talks with the Network Ops side of the house, we see very distinct patterns. A smaller set of network admins in fast-moving companies and cloud providers (not all of them run like Google) very quickly realize that by using Pluribus Netvisor and Server-Switches, they can have an instant view of their server and application infrastructure, give more control to the application guys (and make their clients happy), help them debug performance and security issues, and create virtual networks that mirror a physical network because they have control over both. There is a larger set of network admins who are realizing that they need to embrace this change if they want to stay relevant, and who have probably been told by their CxO that they need to cut costs and add value. We, as in Pluribus Networks, would really like to understand the needs of this set of people so we can tweak the management plane to be more comfortable for them, so they can focus on adding differentiation and value instead of worrying about how to configure VXLAN.

For the infrastructure to grow, we can probably take the easy path (which is already happening) and deliver the Top of the Rack to Server Operations, but the reality is that the server admin is already very loaded and would love it if his network counterpart could help him. Guys, we would love to hear your thoughts on the fast-changing world of the Top of the Rack switch. Any concrete examples from both network and server operations would be very useful. You can leave comments on this blog or send me direct emails.

Crossbow on Big F#@!ing Webtone Switch

Back in the days of Sun Microsystems, Scott McNealy asked us to build a big F#@!ing Webtone Switch. At that time, the underlying pieces weren’t there, but over the last few years the possibilities have opened up. We now have switch chips from Broadcom and Intel that switch at 1.2Tbps in H/W. From an OS view, 1.2Tbps of switching at 300ns latency is great, but the more amazing thing is PCIe as a control plane, which allows 20-40Gbps of control plane B/W where you can change switch registers, L2/L3 tables, TCAMs, etc. at nanosecond rates.

So after more than three years of work and a million lines of C code, the Pluribus Networks engineering team has the switch chip under Crossbow control. For people who are not sure what I am talking about: in 2005, project Crossbow invented virtual switching inside a server hypervisor and introduced hardware-based Virtual NICs and dynamic polling to get 40Gbps of bandwidth through a server OS. The details were published in “Crossbow: From Hardware Virtualized NICs to Virtualized Networks” in ACM SIGCOMM VISA 09.

In the quest to benefit from the merchant silicon ecosystem and orchestrate the entire infrastructure using an open source OS on switches, the industry has been going down suboptimal paths. The most notable efforts around a centralized controller can barely deal with the scale of a single switch and typically require sending a packet to a controller running on a separate server. The latency of these transactions (typically milliseconds to seconds) defeats the microsecond reaction time required in virtualized environments where network resources are shared. The other approach, of just throwing the Intel or Broadcom SDK on a whitebox switch with Linux and Quagga, doesn’t really solve the control plane problem. The Broadcom and Intel SDKs are crafted for their specific switch chips and meant for ease of configuration, not for high-speed control plane software.

Bringing the Crossbow architecture onto the switch, where it is part of the Network OS directly controlling the switch chip via PCIe, gives us the following benefits:

- Integrated switch hardware with a fully programmable control plane, allowing the performance and scale necessary to deal with 10Gbps switches (the distributed control plane is part of the Network OS running on the switch itself).

- Enabling applications like DDoS, IDS, firewall, load balancer, routing, messaging, etc. that need to be in the network to run on the switch itself and benefit from the H/W offload, high-speed snooping, and flow capability that the switch chip offers, via C, Java, Perl, Python, etc. programming interfaces in a UNIX/Linux environment. Development, deployment, and resource provisioning of these applications on Crossbow-enabled switches is the same as with current server mechanisms and uses the existing tool chain (gcc/gdb, kvm, etc.).

- Bringing the benefits of the merchant silicon ecosystem to switches under Openstack control, enabling a faster pace of innovation and cost advantages.

As we get ready to roll Netvisor (and its open source version – openNetvisor) out, I will discuss more details on this blog in the near future.

Netvisor and iTOR Unvieled

After a long wait, we finally unveiled stage 1 of the big solution – the Netvisor and our intelligent Top of the Rack (iTOR) switch. If you haven’t had a chance to see, you can read about it here. At this point, we have enough boxes on the way that we can open the beta to slightly larger audience. Some more details about the hardware – it has 48 10gigabit ethernet ports which can take a sfp+ optical module, sfp+ direct attach or a 1gigbit RJ45 module along with 4x40gigabit qsfp ports. The Network Hypervisor controlling one or more iTOR is a full fledged Operating System and amongst other things capable of running your hardest applications. Comes with all tools like gcc/gdb/perl already there and you can load anything else that is not there. Why you may ask – if you always had an application that needed to be in the network, now it truly can be on the network. Imagining doing your physical or virtual load balancers, proxy servers, monitoring engines, IDS systems, SPAM filters, running on our network hypervisor where they are truly in the network without needing anything to plug in. Create you virtual networks along with virtual appliances in seconds, snapshot it, clone it and bring it back up in seconds. This is SDN ready for immediate deployment.

Intrigued? We might be able to roll you into the beta program. It is a limited program, but if you have special needs, then we have a place for you. If you truly think the networks of today can’t meet your needs, then we might have a special place for you. Send us an email or click here. Deploying the beta is very easy. If you have a rack of servers (with 1, 10, or 40gigE ports), then that is all we need. If you are dealing with a large number of Virtual Machines on servers where the server runs some variant of Linux and KVM/Xen, then you should definitely talk to us. Join our Beta program or call us to learn more.

How does Openflow, SDN help Virtualization/Cloud (Part 3 of 3) – Why Nicira had to do a deal?

The challenges faced by Openflow and SDN

This is the 3rd and final article in this series. As promised, let’s look at some of the challenges facing this space and how we are addressing those challenges.

Challenge 1 – Which is why Nicira had to get a big partner

I have seen a lot of articles about Nicira being acquired. The question no one has asked is – if the space is so hot, why did Nicira sell so early? The deal size ($1.26B) was hardly chump change, but if I were them and my stock was rising exponentially, I would have held off in the hope of changing the world. So what was the rush? I believe the answer lies in some of the issues I discussed in article 2 of this series a few months back – the difference between server (Controller-based) and switch (Fabric-based) approaches. The Nicira solution was very dependent on the server and the server hypervisor. The world of server operating systems and hypervisors is so fragmented that staying independent would have been a very uphill battle. Tying up with one of the biggest hypervisor vendors made sense to ensure that their technology keeps moving forward. And VMware is a good company driven by solid technology. So, perhaps, the only question is how long before the VMware/EMC and Cisco relationship comes unhinged?

Challenge 2 – The divide between Control Plane and Data Plane

The current promise of having a standard way of controlling networking and a controller that is platform independent is huge. It provides simplified network management and rapid scale for virtual networks. Yet the current implementations have become problematic.

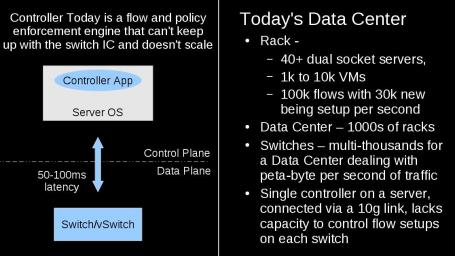

Since the switches are dumb and do not have a global view, the current controllers have turned into policy enforcement engines as well, i.e. a new flow setup requires the controller to agree, which means every flow needs to go through the controller, which instantiates it on the switch. This raises several issues:

- A controller, which is essentially an application running on a server OS over a 10 Gbps link (with a latency of tens of milliseconds), is in charge of controlling a switch that is switching 1.2 Tbps of traffic at an average latency of under a microsecond and deals with 100k flows, with 30% of them being set up or torn down every second. To put things in perspective, a controller takes tens of milliseconds to set up a flow, while the life of a flow transferring 10Mb of data (a typical web page) is 10 msec!!

- To deal with 100k flows, the switch chips need to have that kind of flow capability. The current (and coming) generation of chips has nowhere near such capability, so one can only use the flow table as a cache, which brings us to the 3rd issue.

- Flow setup rate is anemic at best on the existing hardware. You are lucky if you can get 1000 flows per second.
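To see how lopsided these numbers are, here is a rough back-of-the-envelope sketch in Python that uses only the figures quoted in the list above. The 10 ms controller setup time is my assumption for the low end of "tens of milliseconds", and counting every setup or teardown as one control-path event is a simplification, so treat the result as an order-of-magnitude estimate.

```python
# Back-of-the-envelope arithmetic with the figures quoted in the list above.
ACTIVE_FLOWS = 100_000        # flows the switch deals with
CHURN_PERCENT = 30            # percent set up or torn down every second
HW_SETUP_RATE = 1_000         # flow setups per second on existing hardware
FLOW_LIFETIME_MS = 10         # life of a short flow, as quoted above
CONTROLLER_SETUP_MS = 10      # ASSUMPTION: low end of "tens of milliseconds"


def flow_events_per_second(active_flows: int, churn_percent: int) -> int:
    """Setups and teardowns per second (each counted as one event)."""
    return active_flows * churn_percent // 100


def shortfall_factor(events_per_second: int, hw_setup_rate: int) -> float:
    """How many times the flow churn exceeds the hardware setup rate."""
    return events_per_second / hw_setup_rate


events = flow_events_per_second(ACTIVE_FLOWS, CHURN_PERCENT)
shortfall = shortfall_factor(events, HW_SETUP_RATE)
setup_vs_lifetime = CONTROLLER_SETUP_MS / FLOW_LIFETIME_MS

print(f"flow events per second: {events}")
print(f"churn is {shortfall:.0f}x the hardware setup rate")
print(f"controller setup time is {setup_vs_lifetime:.0%} of the flow lifetime")
```

With these inputs the churn works out to 30,000 flow events per second, roughly 30 times what a 1000 flows per second setup rate can absorb, and even the most optimistic controller setup time is as long as the flow itself.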

So what is lacking is a Network Operating System on the switch to support the controller app. If you look at the server world, the administrator specifies the policies and it’s the job of the OS, working very closely with the H/W, to enforce them. The current scenario feels like an application running on bare metal with no Operating System support. Since this is a highly specialized application, it needs a specialized Operating System – a Network Operating System which can also be virtualized.

Challenge 3 – The Controller based Network

For a while, people were just tied to their inflexible networks, which didn’t see any innovation in the last two decades while servers and storage went through a major metamorphosis. That frustration gave birth to Openflow/SDN, which has currently morphed into a controller mania. Moving the brain out of the body and separating them creates somewhat of a split-brain problem, since the body (or switch in this case) still needs somewhat of a brain. What we need is a solution that encompasses the entire L2 fabric, where the controller and fabric work as one while providing easy abstractions for the user to achieve their virtualization, SLA and monitoring needs.

A Distributed Network Hypervisor or Netvisor to the rescue

What we (at Pluribus) saw early on is that the world of servers is a very good example. The commoditization of chips and value moving to software is pretty much what’s happening in the world of storage and is bound to happen in the world of networking. So we decided to do things in the right order, i.e. get the bleeding edge commodity chips and create a Network Operating System with the following properties:

- Network OS – since a switch chip is a very specialized and powerful chip.

- Distributed – There is always more than one switch in the network, and they need to work in tandem to support end-to-end flows.

- Virtualized – Ability to run physical and virtual networking applications. As I mentioned before, a switch is not the network. We need to deal with all network services in physical and virtual form, and the network OS needs to support that.

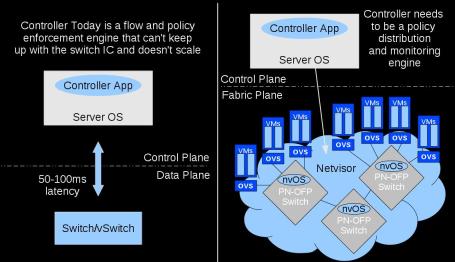

Hence we created a Distributed Network Hypervisor called Netvisor, or nvOS for short. It’s designed to run on the switches and support virtual and physical network services. It also runs a controller on itself, where the controller is a policy distribution engine and no longer a policy enforcement engine.

As the above figure shows, the current line drawn between the control plane and data plane is not going to scale and perform. The line we originally drew (the founding principle of Pluribus Networks) needs to be delivered for SDN and Openflow to deliver their true promise.

How does Openflow, SDN help Virtualization/Cloud (Part 2 of 3)

Using Openflow – State of the Art

In my last article I discussed the components of Openflow and building blocks of a Software Defined Network. In this part, let me discuss some of the things people are doing to make it all work. One of the pieces that needs to be discussed beforehand is the various ways in which a packet can be matched against a flow and what kind of actions can be taken.

Flow Classification and the split between Hardware and Software

A flow is a simple mechanism to identify a group of packets on the wire. Packets coming from a particular machine can be identified by the machine’s MAC or IP address, which appears as the source MAC in the L2 header or the source IP in the L3 header. By putting a flow rule around either of those fields and just counting the packets going through the switch that hit that rule, we can determine the number of packets being sent by the machine. It’s useful information. To make it more useful, one could add another flow to measure the packets going to our target machine. Adding a destination MAC or destination IP rule based on the machine’s MAC or IP address will accomplish that, and using our 2 flows, we can find out how many packets are coming into and going out of the machine.
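The two counting flows described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how any switch or Openflow implementation represents rules: the `Packet` and `FlowRule` classes, the `count_packets` helper and the MAC addresses are all hypothetical, and only MAC matching is shown (IP matching would follow the same pattern).

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class Packet:
    src_mac: str
    dst_mac: str


@dataclass
class FlowRule:
    """Counts packets whose MAC fields match; None acts as a wildcard."""
    name: str
    src_mac: Optional[str] = None
    dst_mac: Optional[str] = None
    packets: int = 0

    def matches(self, pkt: Packet) -> bool:
        src_ok = self.src_mac is None or self.src_mac == pkt.src_mac
        dst_ok = self.dst_mac is None or self.dst_mac == pkt.dst_mac
        return src_ok and dst_ok


def count_packets(rules: List[FlowRule], packets: Iterable[Packet]) -> None:
    """Bump the counter of every rule that a packet hits."""
    for pkt in packets:
        for rule in rules:
            if rule.matches(pkt):
                rule.packets += 1


# Hypothetical MAC addresses, for illustration only.
TARGET = "00:00:00:00:00:0a"
OTHER = "00:00:00:00:00:0b"

sent = FlowRule("from-target", src_mac=TARGET)
received = FlowRule("to-target", dst_mac=TARGET)

traffic = [
    Packet(src_mac=TARGET, dst_mac=OTHER),
    Packet(src_mac=TARGET, dst_mac=OTHER),
    Packet(src_mac=OTHER, dst_mac=TARGET),
    Packet(src_mac=OTHER, dst_mac=OTHER),  # unrelated traffic, hits no rule
]
count_packets([sent, received], traffic)
print(f"sent by target: {sent.packets}, received by target: {received.packets}")
```

On a real switch the matching and counting would happen in the CAM/TCAM rather than in a software loop; the sketch only shows what the two rules measure.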

The next question is, who implements the rules we just discussed? There are several options, each with its advantages and disadvantages:

- Server Based Approach – It is the easiest way, since the server already has to process the packets and can easily keep track of some statistics as well. The issue is when it’s not a real server but a virtual machine on the server that we want to track. We can still let the hypervisor track the packets or ask the Virtual Machine to track them. The big disadvantage of this approach is that asking the server to do things on your behalf needs a certain level of trust (security holes and digital certificates come to mind), depends on Operating System capability, and directly lowers performance. Since the server hypervisor has to classify or assign packets to flows and take some actions, it needs to see the packets, making hardware based virtualization (SR-IOV) impossible to adopt. Most of the data center bridging standards and IO virtualization standards are going towards a hardware based switch in the server, so doing things in the S/W layers of the server is not going to be possible. The other major disadvantage is scaling, since orchestrating policies becomes more difficult as the number of servers grows.

- Server based with H/W offload – There is more talk around this than real implementations, but it’s worth mentioning that people have discussed putting special capabilities in the NICs on the server to offload some flow processing. The advantage is performance and security (since the Hypervisor controls the NIC, the Virtual Machine can’t circumvent it). The disadvantage is cost and scaling issues. The chips capable of doing this (TCAMs etc.) are expensive, and trying to orchestrate across large numbers of servers severely limits the scale. We are already seeing the Intel Sandy Bridge architecture coming to life, which is integrating 10GigE NICs. Adding TCAMs will increase the basic cost by $800-900 and also add significant complexity.

- Probe Based Approach – Have probes in the network analyze flows. There are companies out there that specialize in inserting probes in the network and collecting the data, and they can do this well as long as you only want to observe things. If redirection, traffic shaping, header modification or other actions are needed, these passive probes will not work. In addition, inserting them requires intrusive work in cabling etc. Needless to say, this is one of the least favorite approaches.

- Switch based approach – Since all the traffic passes through the switches anyway, having them deal with flows and take the associated actions makes a lot of sense. Modern switch chips have H/W based CAMs and TCAMs which can take a rule and act on it without adding latency or reducing the throughput of the packet stream. In my past life, as Architect of Solaris Networking and virtualization, I took the software based approach, but given the growing Virtual Machine density, SR-IOV type features, and the growing need for analytics and traffic shaping with performance, I think the switch based approach is far superior. So the CAM and TCAM measuring flows is the hardware piece. The software piece is the ability to add and delete rules on the fly. And Openflow provides a pseudo standard that allows a programmer to program any switch. But the biggest advantages of this approach are scale, ease of use, and administrative separation. The scale comes from orchestrating your flows and policies across fewer devices (one switch for approx 50 servers). Also, the people in charge of networks and storage networks are at times different, and keeping the administrative separation is useful although not required.

So needless to say, I have currently taken the approach of solving this problem on the switch while coordinating with the host using standards like EVB/DCB etc., which we will discuss at a later time. Given that new generation switch chips are pretty similar to a server CPU and have the same complexity, the problem begs for a real Operating System on top which can help us write openflow based applications. This is where we step in. One part of the Pluribus Networks effort is around implementing a distributed network Hypervisor (called Netvisor™, a key component of the nvOS™ Operating System) to give openflow programmers real teeth. We treat any switch chip the same as a server chip, and most of the code is platform independent with very little that is written to the chip’s instruction set. It is the same way that the same Linux code (with a little platform specific stuff) runs on x86 and Power, or OpenSolaris code runs on x86 and Sparc.

Current implementations

So here is a little overview of the projects and people who are leading the charge in the brave world of flows and Software Defined Networking. Before raking me over the coals for missing things, note that the stuff below is what I consider mainstream implementations that apply in the world of data centers today (Disclaimer: I have purposely left out most of the research efforts that didn’t reach a mainstream product since there are too many):

- The discussion has to start with project Crossbow, which I believe is the first flow implementation with a dedicated H/W resources approach. It was available in OpenSolaris in 2007 and finally shipped in Solaris 11 (delayed due to the Oracle/Sun merger). The virtual switching in host and H/W based patents (7613132, 7643482, 7613198, 7499463, etc.) were filed by me and fellow conspirators from 2004 onwards and awarded from 2009 onwards. Keep in mind that when Crossbow had virtual switching with a H/W classifier running in OpenSolaris, Xen etc. were just coming out with S/W based bridging. The 2 commands – flowadm and dladm – allow users to create flows and S/W or H/W based virtual NICs that can be assigned to virtual machines. This is the Server Based Approach discussed earlier that ships in a mainstream OS and is pretty widely deployed.

- An approach similar to the above was later adopted by our fellow company Nicira in the form of their NVP Architecture. They enhanced the offering by allowing an Openflow based Orchestrator to control the virtual switching in the host, although their focus has primarily been on the virtualization side and not so much on the application flows side.

- Another of our sister and partner companies, Big Switch Networks, has taken a hybrid approach of orchestrating any openflow capable device, which can be a switch or a virtual switch inside a hypervisor. Since they are still partially in stealth, it would not be my place to talk details.

- Obviously, every existing network vendor claims that they are working on SDN and openflow. But by definition, SDN requires programmability and Operating Systems to run your programs on. Most of the existing network vendors lack the know-how or the ability to do this. They have rich bank balances, and if they can acquire the right companies and leave them alone, then they can potentially bridge the chasm (although it is going to be painful).

And then there is our effort at Pluribus Networks. It’s a well kept secret that we are building Server-Switches that run Netvisor™, which has massive flow capabilities and would be ideal for all the people developing things in the SDN space. But then we are in stealth mode, and there is a lot more to us which we will get around to discussing in the coming days.

How does Openflow and SDN help Virtualization/Cloud

Introduction to Software Defined Networking and OpenFlow

Often I hear the terms Openflow and Software Defined Networking used in many different contexts, which range from solving something simple and useful to literally solving the world hunger problem (or fixing the world economy for that matter). I often get asked to explain the various aspects of how Openflow is changing our lives. So here goes an explanation of the religion called Openflow (and Software Defined Networking) and the various ways it’s manifesting itself in our day to day life. Again, it’s too much to write in one article, so I will make it a series of 3 articles. This one focuses on the protocol itself. The 2nd article will focus on how people are trying to develop it and some end user perspective that I have accumulated in the last year or so. The last article in the series will discuss the challenges and what we are doing to help.

Value Proposition

The basic piece of Openflow is nothing more than a wire protocol that allows a piece of code to talk to another piece of code. The idea is that for a typical piece of network equipment, instead of logging in and configuring it via its embedded web or command line interface (the way you configure your home wifi router), you can get the Controller from someone other than the equipment vendor. Now technically and in the short term, you are probably worse off because you are getting the equipment from one vendor and the management interface from another, and there are bound to be rough edges.

Openflow creates a standard around how the management interface or Controller talks to the equipment, so the equipment vendors can design their equipment without worrying about the management piece and someone else can create a management piece knowing well that it will manage any equipment that supports Openflow. So people who understand standards ask, what’s the big deal? I still can’t do more than what the equipment is designed to do!! And that is the holy grail around any standard. By creating the standard, you let the people who make equipment focus on their expertise and the people doing management make the controllers better. This is in no way different from how computers work today. Intel/AMD create the key chips, vendors like Dell, HP etc. create the servers, and the Linux community (or BSD, OpenSolaris, etc.) creates the OS, and it all works together, offering a better solution. It achieves one more thing – it drives the H/W cost lower and creates more competition while allowing an end user to pick the best H/W (from their point of view) and the best controller based on features, reliability, etc. There is no monopoly, there are plenty of choices, and it’s all great for the end user.

This matters especially in the networking space, where innovation was lacking for a while and a few companies were used to huge margins because users had no choice. One trend that is fueling the fire behind SDN is virtualization. Both the server and storage sides (H/W and OS) have made good progress on this front, but the network is far behind. By opening up the space, SDN is allowing people like me (who are OS and Distributed Systems people) to step into this world and drive the same innovation on the network side. So Openflow/SDN are great standards for the end user, and people who understand them see the power behind them.

Key Features

Openflow Spec 1.1.2 is just out with minor improvements, while 1.1.1 has been out for a few months. Most of the vendors only have 1.0.0 implemented. If you look at the spec, you will see the data structures and message syntax needed for a controller to talk to a device it wants to control. Functionality wise, it can be grouped into the following parts (understand that I am trying to help people who don’t want to read hundreds of pages of specs):

- The device discovery and connection establishment part where you tie in a controller to a device that it wants to control.

- Creating the Flows. In a typical network, different types of traffic are mixed together, and the packets can be grouped in the form of flows. If you look at the layer 2 header, packets for the same VLAN can be a flow, packets belonging to a pair of MAC addresses can be a flow, and so on. Similarly, packets belonging to an IP subnet or an IP address plus TCP/UDP port (service) can be termed a flow. Any combination of Layer 2, 3 and 4 headers that allows us to uniquely identify a packet stream on the wire is termed a flow, and the Openflow protocol makes special efforts to specify these flows. An Openflow controller can specify a flow to a switch, which can apply to specific ports or to all ports, and ask the switch to take special actions when it matches a packet to a flow.

- Action on matching a flow. As part of specifying the flow, the protocol allows the controller to specify what action to take when a packet matches the flow. The actions range from copying the packet to decrementing the Time to Live, changing/adding a QoS label, etc. But the most important action (in my view) is the ability to direct the original packet (or a copy) to a specific port or to the controller itself.

- Flow Table where the flows are created. For an actual device, this is typically the TCAM where the flow is instantiated and applied to incoming packets. Most devices are pretty limited by this and can typically support a very small set of flows today. The protocol allows for specifying multiple tables and the ability to pipeline across those tables, but given the state of today’s and mid term hardware, a single table is all we can work with.

- The last piece is the Counters. Most devices support port level counters which the openflow controllers can read. In addition, the protocol supports flow level counters, but the current set of devices is very limited on that as well.
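To make the pieces above concrete, here is a minimal Python sketch of how flows, actions, a single size-limited flow table and per-flow counters fit together. It is a toy model under my own assumptions, not the Openflow wire format: the `FlowEntry` and `FlowTable` classes, the header field names and the action strings are all hypothetical, and sending table misses to the controller is an assumed default.

```python
from dataclasses import dataclass
from typing import Dict, List, Union

HeaderValue = Union[int, str]


@dataclass
class FlowEntry:
    match: Dict[str, HeaderValue]   # header field -> required value; missing fields are wildcards
    actions: List[str]              # hypothetical action names, not the spec's encoding
    packets: int = 0                # per-flow counter


class FlowTable:
    """A single, size-limited table, standing in for a small TCAM."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.entries: List[FlowEntry] = []

    def add(self, entry: FlowEntry) -> bool:
        """Install a flow; return False when the table is full."""
        if len(self.entries) >= self.capacity:
            return False
        self.entries.append(entry)
        return True

    def process(self, headers: Dict[str, HeaderValue]) -> List[str]:
        """Return the actions of the first matching flow and count the hit."""
        for entry in self.entries:
            if all(headers.get(k) == v for k, v in entry.match.items()):
                entry.packets += 1
                return entry.actions
        return ["send_to_controller"]  # ASSUMPTION: misses go to the controller


table = FlowTable(capacity=2)
web = FlowEntry(match={"ip_dst": "10.0.0.5", "tcp_dst": 80}, actions=["output:3"])
vlan = FlowEntry(match={"vlan": 100}, actions=["copy_to_controller", "output:7"])
installed = [table.add(web), table.add(vlan), table.add(FlowEntry({"vlan": 200}, ["drop"]))]

pkt = {"vlan": 100, "ip_dst": "10.0.0.5", "tcp_dst": 80}
actions = table.process(pkt)
miss = table.process({"vlan": 300, "ip_dst": "10.0.0.9", "tcp_dst": 22})
print(installed, actions, web.packets, vlan.packets, miss)
```

The third `add` is refused because the table is full, which is the same pressure a small TCAM puts on a controller: it has to decide which flows deserve a hardware slot.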

Putting it all together

So now that we understand the components, we can see how it works. A controller (which is a piece of code) running on a standard server box starts and discovers a device that it wants to manage. In today’s world, that device typically is an ethernet switch. Once connected, it puts the device under its control, sets flows with actions, and reads status from the device.

As an example, assume that a user is experimenting with a new Layer 3 protocol. He can add a flow that makes the switch redirect all matching packets to the controller, where each packet gets modified appropriately and is redirected through a specific egress port on the device. This is much easier to implement, since the controller itself is a piece of code running on a standard OS, so adding code to it to do something experimental is pretty easy. The most powerful thing here is that the user is not impacting the rest of the network and doesn’t need his/her own dedicated network.

My own favorite (that we have experimented with) is a debugging application for a data center or enterprise where the user needs to debug his own client/server application. The user can try to capture the packets on multiple machines running his clients and server, but the easier thing would be to set a flow on the switch based on the server IP address and TCP port (for the service), with an action that sends a copy of all matching packets to the controller with a timestamp. This allows the user to debug his application much more easily.
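The debugging flow described above can be sketched as a small Python model. This is not controller code for any real product: the `DebugTap` class, its methods and the addresses are hypothetical, and matching traffic in both directions (to and from the service) is my assumption about what a debugging user would want.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    payload: bytes = b""


class DebugTap:
    """Copies packets to or from one service, with a timestamp, to a capture list."""

    def __init__(self, server_ip: str, service_port: int,
                 clock: Callable[[], float] = time.time) -> None:
        self.server_ip = server_ip
        self.service_port = service_port
        self.clock = clock
        self.captured: List[Tuple[float, Packet]] = []  # what the controller would receive

    def matches(self, pkt: Packet) -> bool:
        to_server = (pkt.dst_ip, pkt.dst_port) == (self.server_ip, self.service_port)
        from_server = (pkt.src_ip, pkt.src_port) == (self.server_ip, self.service_port)
        return to_server or from_server

    def forward(self, pkt: Packet) -> Packet:
        """Record a timestamped copy on a match; the original is forwarded untouched."""
        if self.matches(pkt):
            self.captured.append((self.clock(), pkt))
        return pkt


# Hypothetical addresses and a fake clock so the example is repeatable.
ticks = iter([1.0, 2.0, 3.0])
tap = DebugTap("10.0.0.5", 8080, clock=lambda: next(ticks))

stream = [
    Packet("10.0.0.21", "10.0.0.5", 40000, 8080, b"request"),
    Packet("10.0.0.5", "10.0.0.21", 8080, 40000, b"response"),
    Packet("10.0.0.21", "10.0.0.9", 40001, 22),  # unrelated SSH traffic
]
forwarded = [tap.forward(p) for p in stream]
for stamp, pkt in tap.captured:
    print(stamp, pkt.src_ip, "->", pkt.dst_ip, pkt.payload)
```

The point of the design is that the capture happens in one place, at the switch, so the user sees both sides of the conversation on a single timeline without touching the client or server machines.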

Again, the power of Openflow and Software Defined Networking is that it allows people to innovate and only requires someone to solve their problem by writing simple code (or using code provided by others). It’s important to keep in mind that the switch is a really powerful device, since everything goes through it, and allowing it to be controlled by C, Java, or Perl code is very powerful. The control moves from the switch designer to application developers (to the discomfort of the switch vendors 🙂).

So finally, how does it help Virtualization and Cloud?

This is the reason why I am so excited and ended up spending time writing the blog. The key premise in the world of virtualization is dynamic control of resource utilization. Again, network utilization and SLA are important, but the key part we need to solve is the utilization of servers. The holy grail is a large pool of servers, each running 20-50 virtual machines, controlled by software which optimizes for CPU/memory utilization. The key issue is that the Virtual Machines are grouped together in terms of the application they run or the application developer that controls them. To prevent a free-for-all, they are typically tied together with some VLAN and ACLs, and have a network identity in terms of IP/MAC addresses, SLA/QoS, etc. For the controlling software to migrate the VM freely, it wants to manage the VM’s network parameters on the target switch port as well. And this is where the current generation of switches fails. They require human intervention to configure the various network parameters on the switch to match the VM.

So in order for a VM to migrate freely under software control, it still requires human intervention on the network side. With Openflow, the software orchestrating server utilization, which schedules the VMs based on policies/SLA, can set the matching network policies without human intervention.
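As a rough illustration of what "setting the matching network policies without human intervention" could look like, here is a minimal Python sketch. Everything in it is hypothetical: the `NetworkProfile` fields, the `Switch` class and the `migrate_vm` helper are stand-ins I made up for the orchestration software and the programmable switch, and the ordering (program the target port first, then release the source port) is one reasonable choice rather than a prescribed one.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class NetworkProfile:
    """Network identity and policy that must follow a VM (fields are illustrative)."""
    vlan: int
    mac: str
    ip: str
    qos_class: str


class Switch:
    """Stand-in for a switch whose ports can be programmed by software."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.port_profiles: Dict[int, NetworkProfile] = {}

    def apply(self, port: int, profile: NetworkProfile) -> None:
        self.port_profiles[port] = profile

    def clear(self, port: int) -> None:
        self.port_profiles.pop(port, None)


def migrate_vm(profile: NetworkProfile,
               src: Switch, src_port: int,
               dst: Switch, dst_port: int) -> None:
    """Program the target port before releasing the source port."""
    dst.apply(dst_port, profile)
    src.clear(src_port)


rack1, rack2 = Switch("rack1-tor"), Switch("rack2-tor")
web_vm = NetworkProfile(vlan=100, mac="00:00:00:00:00:0a", ip="10.0.0.5", qos_class="gold")

rack1.apply(12, web_vm)                     # VM initially lives behind rack1 port 12
migrate_vm(web_vm, rack1, 12, rack2, 7)     # orchestrator moves it to rack2 port 7
print(rack1.port_profiles, rack2.port_profiles)
```

The same software that decides where the VM should run makes the two switch calls, so no one has to log in to a switch and retype the VLAN, ACL or QoS settings by hand.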

Just the way a typical server OS has a policy driven scheduler which controls the various application threads on dozens of CPUs (yes, even a low end dual socket server has 6 cores per socket with multiple hardware threads), Openflow allows us to build a combined server/storage/network scheduler that can optimize the VM placement based on configured policies.

Again, Openflow is just a wire protocol and a pseudo standard, but it allows people like me to add huge value which wasn’t possible before. In the next article, we will go deeper into what people are trying to build and look at some more specific use cases. Stay tuned and Happy Holidays!!

Network 2.0: Virtualization without Limits

So the theme of the day is Network Virtualization, Software Defined Networks, and taking virtualization to its logical conclusion, i.e. server, storage and network in a giant resource pool that can be allocated/assigned any which way. It’s easier said than done, though. Server and storage virtualization were a bit simpler since we were dealing with one OS that needed to provide the right abstraction layer. The H/W resource pool (disk, cpu, network, memory, etc.) was managed by the single OS, so provisioning it between various virtual machines or storage pools was a bit simpler. The network by definition is useful only when multiple devices are connected, and trying to treat them as a single resource pool is harder. A virtual network has to deal with not just links, bandwidth, latency and queues but also higher level functionality like routing, load balancing, firewalling, DNS, DHCP, VPN, etc. And we haven’t even talked about how this will all hook up together with virtual machines and virtual storage pools in an easy manner. Now before you argue that every component is already virtualized (which is very true), I would argue that it still doesn’t give me a virtual network. It is the same as someone wanting dinner and instead being served raw potatoes, onions, tomatoes, eggs, etc. and shown the stove to make his own omelette.

So having pioneered virtual switching and resource control in the server OS (Solaris to be specific – the project was called Crossbow, which I started in 2003 and which got integrated in OpenSolaris in 2008), I set out to do the same for larger networks in the form of Pluribus Networks Inc and apply the hard lessons we learned from enterprise customers. This is what we call Network 2.0: Virtualization without Limits. The real reason it is a tough problem to solve is that switching needs to be very high performance and low latency. That forces all the switching functionality to be inside a highly complicated ASIC, which does all the hard work of shuffling 1.2 Terabits per second of data at sub-microsecond latencies and as such doesn’t need much software on top. The embedded OS controlling the switch is mostly used for just configuring the switch chip using a CLI (command line interface) that allows the administrator to control and configure each component on the switch but almost nothing else. So when we started playing with some of the prototype next generation boxes that our friends at Fulcrum and Broadcom gave us, we just kept asking if we could have a real OS running the chip to be able to do something more useful. So we asked our friends again if there was some way to put a full-fledged OS on top (being the OS person I have been for most of my life). And that was when I realized that to solve the network virtualization problem, we really need an OS that understands resource pools and virtualization on the chip. But a single switch by itself is not very interesting, so we need an OS that controls all the switches. Hmm – one OS that controls them all (borrowing from LOTR, which reminds me to ask Peter Jackson whatever happened to the prequel)!!

So before we can even start building anything more complicated, we built a network hypervisor that has semantics similar to a tightly coupled cluster but controls a collection of switches and scales from one instance to a hundred plus instances.

The Network OS is finally taking life and is able to treat the network exactly as one giant resource pool. Please don’t confuse the Network OS with the typical management layer that manages a collection of devices. We do still need a management layer to configure and manage the OS, but the policy enforcement, congestion control and resource management across all devices is done by the OS. In the same way, a server cluster doesn’t get rid of the management layer but actually gives the management layer something that is more manageable.